LLaMA 2 (Large Language Model Meta AI): the open-source LLM from Facebook's Meta AI

LLaMA: announced February 2023

LLaMA 2: released July 2023

- Parameter counts: 7B, 13B, 70B

//-----------------------------------------------------------------------------

Performance

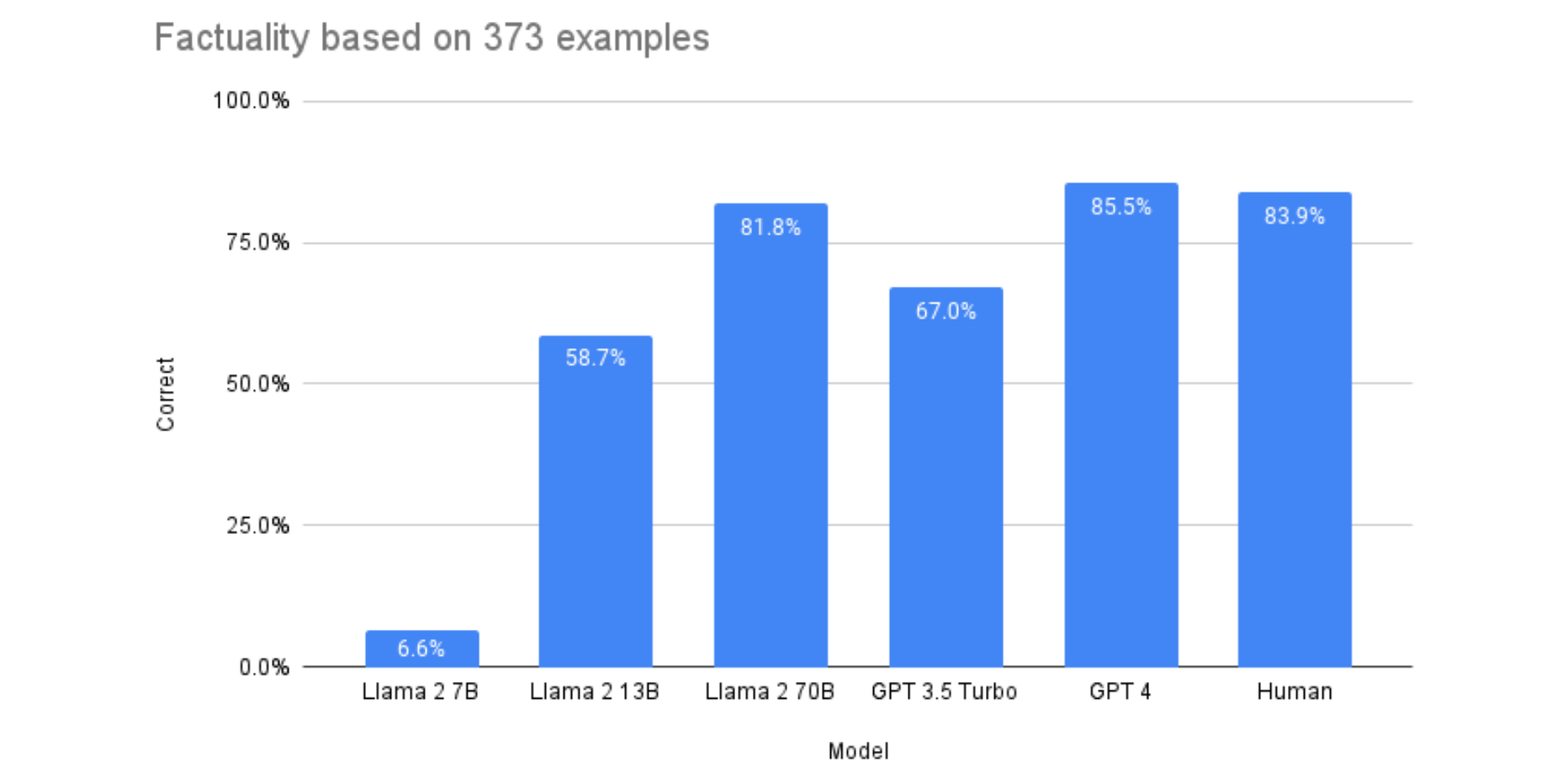

Llama 2 13B performs roughly on par with ChatGPT (OpenAI GPT-3.5 Turbo).

Llama 2 is about as factually accurate as GPT-4 for summaries and is 30X cheaper

https://www.anyscale.com/blog/llama-2-is-about-as-factually-accurate-as-gpt-4-for-summaries-and-is-30x-cheaper?ref=promptengineering.org

//-----------------------------------------------------------------------------

Llama 2 chat demo

https://www.llama2.ai/

- Repository

https://github.com/facebookresearch/llama

//-----------------------------------------------------------------------------

Model size (download size)

//-------------------------------------

llama

7B - 13G

13B - 25G

30B - 61G

65B - 122G

//-------------------------------------

llama 2

llama-2-7b : 13G

llama-2-7b-chat : 13G

llama-2-13b : 25G

llama-2-13b-chat : 25G

llama-2-70b : 129G

llama-2-70b-chat : 129G

- Note: the "4bit" version below is also about 130G

https://huggingface.co/4bit/Llama-2-70b-chat-hf
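The sizes above follow almost directly from the parameter count multiplied by the bytes stored per parameter. Below is a minimal Python sketch of that arithmetic. It assumes nominal parameter counts (7e9, 13e9, 70e9) and ignores file metadata, so the results are only approximate. `weight_size_gib` is a hypothetical helper written for illustration.

```python
# Rough on-disk size of model weights: parameters x bits per parameter.
# Assumption: nominal parameter counts (7e9, 13e9, 70e9); real checkpoints
# differ slightly and also carry small amounts of metadata.

GIB = 2**30  # bytes in one GiB


def weight_size_gib(n_params: float, bits_per_param: float) -> float:
    """Approximate weight storage in GiB at a given precision (hypothetical helper)."""
    return n_params * bits_per_param / 8 / GIB


if __name__ == "__main__":
    for name, n in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
        fp16 = weight_size_gib(n, 16)  # 16-bit weights, as in the official release
        int4 = weight_size_gib(n, 4)   # idealized 4-bit quantization
        print(f"{name}: fp16 ~ {fp16:.0f} GiB, 4-bit ~ {int4:.0f} GiB")
```

At 16 bits per parameter this gives roughly 13, 24 and 130 GiB, in line with the Llama 2 sizes listed above. An idealized 4-bit 70B checkpoint would be around 33 GiB, which is close to the 35G GPTQ file listed below. A ~130G download therefore suggests 16-bit weights, whatever the repository name says.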

//-------------------------------------

TheBloke's Llama-2 GPTQ versions (quantized, much smaller files)

https://huggingface.co/TheBloke/Llama-2-7B-Chat-GPTQ

https://huggingface.co/TheBloke/Llama-2-7B-GPTQ

Parameters - file size - VRAM

7B - 4G - 6G

13B - 7G - 10G

70B - 35G - 38G (also runs with 16G of VRAM, but very slowly)
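Part of the gap between file size and VRAM in this table comes from the attention key/value (KV) cache, which the model fills while generating. The following is a rough Python estimate. It assumes the published Llama 2 shapes: head dimension 128, a 4096-token context, and 8 KV heads for 70B (grouped-query attention). It also assumes a 16-bit cache. `LlamaConfig` and `kv_cache_gib` are hypothetical names used only for this sketch; they are not the transformers classes.

```python
# Estimate 16-bit KV-cache memory for a full-context Llama 2 generation.
# Assumed shapes: head_dim 128, context 4096; 70B uses grouped-query
# attention with 8 KV heads. Names here are hypothetical, for illustration.

from dataclasses import dataclass

GIB = 2**30  # bytes in one GiB


@dataclass
class LlamaConfig:
    n_layers: int
    n_kv_heads: int
    head_dim: int = 128
    context: int = 4096


CONFIGS = {
    "7B": LlamaConfig(n_layers=32, n_kv_heads=32),
    "13B": LlamaConfig(n_layers=40, n_kv_heads=40),
    "70B": LlamaConfig(n_layers=80, n_kv_heads=8),
}


def kv_cache_gib(cfg: LlamaConfig, bytes_per_value: int = 2, batch: int = 1) -> float:
    """Keys + values (factor 2) stored for every layer, KV head, and token."""
    n_values = 2 * cfg.n_layers * cfg.n_kv_heads * cfg.head_dim * cfg.context * batch
    return n_values * bytes_per_value / GIB


if __name__ == "__main__":
    for name, cfg in CONFIGS.items():
        print(f"{name}: KV cache ~ {kv_cache_gib(cfg):.2f} GiB")
```

At full context this comes to 2 GiB for 7B, about 3.1 GiB for 13B and 1.25 GiB for 70B. That roughly accounts for the step from 4G to 6G and from 7G to 10G; activations and CUDA runtime overhead make up the rest. A loader configured with a shorter maximum sequence length reserves proportionally less.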

- Usage

Using https://github.com/oobabooga/text-generation-webui is recommended.

> git clone https://github.com/oobabooga/text-generation-webui

> cd text-generation-webui

> start_windows.bat --listen-port 17860

Once loading finishes, open http://127.0.0.1:17860/ in a browser.

On the Model tab, enter the following in the "Download model or LoRA" field and click the Download button:

https://huggingface.co/TheBloke/Llama-2-7B-Chat-GPTQ

When the download finishes, select the model and click the Load button.

Switch to the Chat tab and enter a question, for example:

Is this world a simulation?
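The Chat model was fine-tuned on a specific `[INST]` / `<<SYS>>` prompt layout, and the web UI can apply it for you through its instruction templates, depending on the chat mode selected. If you send prompts to the model some other way, here is a minimal Python sketch of the layout used in Meta's reference chat code. `build_llama2_prompt` is a hypothetical helper. `<s>` and `</s>` are written as literal text here, although tokenizers usually insert BOS/EOS as special token IDs instead.

```python
# Build a Llama-2-chat prompt in the [INST] / <<SYS>> layout of Meta's
# reference chat code. build_llama2_prompt is a hypothetical helper; <s>/</s>
# are literal text here, whereas tokenizers normally add BOS/EOS as token ids.

from typing import List, Optional, Tuple

B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_llama2_prompt(
    turns: List[Tuple[str, Optional[str]]], system: Optional[str] = None
) -> str:
    """turns: (user, assistant) pairs; the last assistant reply may be None."""
    parts = []
    for i, (user, assistant) in enumerate(turns):
        user = user.strip()
        if i == 0 and system:
            # The system prompt is folded into the first user message.
            user = B_SYS + system.strip() + E_SYS + user
        segment = f"<s>{B_INST} {user} {E_INST}"
        if assistant is not None:
            # Completed exchanges end with the answer and an EOS marker.
            segment += f" {assistant.strip()} </s>"
        parts.append(segment)
    return "".join(parts)
```

For the question above with a system prompt, `build_llama2_prompt([("Is this world a simulation?", None)], system="You are a helpful assistant.")` returns `"<s>[INST] <<SYS>>\nYou are a helpful assistant.\n<</SYS>>\n\nIs this world a simulation? [/INST]"`. The base (non-chat) GPTQ model was not trained on this layout.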

//-----------------------------------------------------------------------------

< Reference >

Using the official Llama 2 models

Request access to Llama 2: the models can only be downloaded after your access request is approved.

https://ai.meta.com/resources/models-and-libraries/llama-downloads/

- Approval may take an hour or more after you apply.

Following the instructions in the approval email, download and run download.sh.

https://github.com/facebookresearch/llama/blob/main/download.sh

- Create a Hugging Face access token for logging in

https://huggingface.co/settings/tokens

- Log in to Hugging Face

huggingface-cli login

At the "Token:" prompt, paste the token with Shift+Insert. The token is not displayed on screen.

- Optional: to download the Hugging Face-format weights, also request access on the Hugging Face repository

https://huggingface.co/meta-llama/Llama-2-70b-chat-hf

- Approval may take an hour or more after you apply.

//-------------------------------------

Repository of example source code for running Llama

https://github.com/facebookresearch/llama-recipes

git clone https://github.com/facebookresearch/llama-recipes

cd llama-recipes

pip install --extra-index-url https://download.pytorch.org/whl/test/cu118 llama-recipes
