Phi: Microsoft's open-source LLM (Large Language Model)

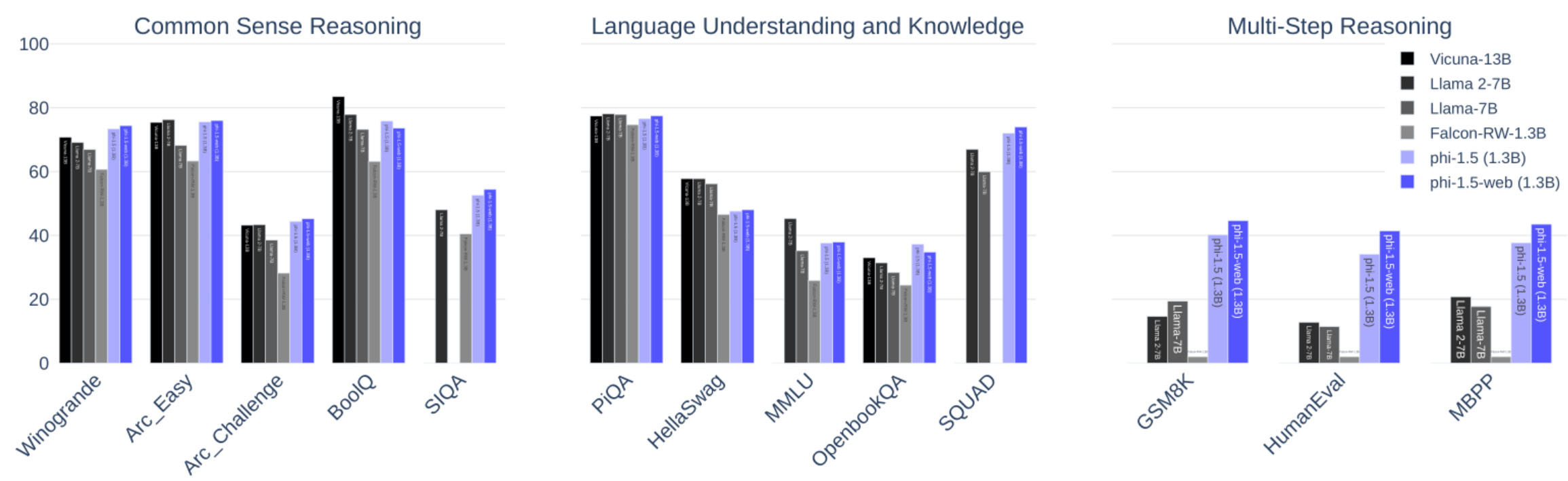

With 1.3 billion (1.3B) parameters, Phi performs on par with Llama 2-7B, a model more than five times its size.
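The size gap can be checked with quick arithmetic. The sketch below uses nominal parameter counts (1.3B and 7B, rounded from the model names, so not exact figures) and assumes 2 bytes per parameter for 16-bit weights to estimate the memory needed just to hold the weights. Both the constant names and the 16-bit assumption are illustrative.

```python
# Nominal parameter counts taken from the model names (rounded, not exact).
PHI_PARAMS = 1.3e9
LLAMA_2_7B_PARAMS = 7.0e9

# Assumption: 16-bit weights (fp16/bf16), i.e. 2 bytes per parameter.
BYTES_PER_PARAM = 2


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Approximate memory for the weights alone, in GB (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


size_ratio = LLAMA_2_7B_PARAMS / PHI_PARAMS

print(f"Llama 2-7B is about {size_ratio:.1f}x the size of Phi")
print(f"Phi weights:        ~{weight_memory_gb(PHI_PARAMS):.1f} GB")
print(f"Llama 2-7B weights: ~{weight_memory_gb(LLAMA_2_7B_PARAMS):.1f} GB")
```

This counts weights only; activations and the generation cache need additional memory at run time.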

Phi-1: announced 2023-07

Phi-1.5: announced 2023-09

https://huggingface.co/microsoft/phi-1_5

https://arxiv.org/abs/2309.05463

//-----------------------------------------------------------------------------

Sample code for running the model

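    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Create all tensors on the GPU by default (requires a CUDA-capable device).
    torch.set_default_device("cuda")

    # Load the Phi-1.5 weights and tokenizer from the Hugging Face Hub.
    model = AutoModelForCausalLM.from_pretrained("microsoft/phi-1_5", trust_remote_code=True)
    tokenizer = AutoTokenizer.from_pretrained("microsoft/phi-1_5", trust_remote_code=True)

    # Named `prompt` rather than `str` to avoid shadowing the built-in type.
    prompt = "Why does suffering happen?"

    inputs = tokenizer(
        prompt,
        return_tensors="pt",
        return_attention_mask=False,
    )

    # max_length counts the prompt tokens as well as the generated ones.
    outputs = model.generate(**inputs, max_length=200)
    text = tokenizer.batch_decode(outputs)[0]
    print(text)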
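
For a causal LM, `generate` returns the prompt tokens followed by the continuation, so the decoded text begins with the question itself. A minimal sketch for keeping only the newly generated part is shown below. `extract_completion` is a hypothetical helper (not part of transformers), and `<|endoftext|>` is assumed to be the tokenizer's end-of-text string.

```python
def extract_completion(text: str, prompt: str, eos_marker: str = "<|endoftext|>") -> str:
    """Return only the generated continuation from a decoded output string.

    Hypothetical helper, not part of transformers. Assumes `text` is the
    decoded result of generate() and `eos_marker` is the end-of-text string.
    """
    # Drop the echoed prompt if the decoded text starts with it.
    if text.startswith(prompt):
        text = text[len(prompt):]
    # Cut everything from the first end-of-text marker onward.
    text = text.split(eos_marker, 1)[0]
    return text.strip()


# Stand-in string for illustration only; not real model output.
decoded = "Why does suffering happen?\nAnswer: ...<|endoftext|>"
print(extract_completion(decoded, "Why does suffering happen?"))
```

In the sample above, the helper would be called with the decoded output and the original prompt string.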
