How to run LLaMA (Large Language Model Meta AI) in 4-bit

- Running it with text-generation-webui

//-----------------------------------------------------------------------------

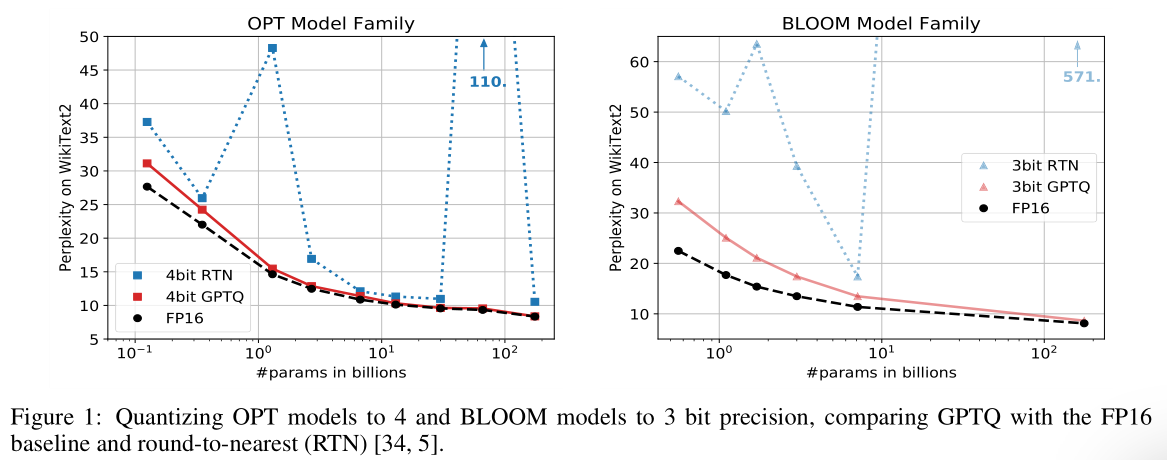

Compared with the original 16-bit model, the 4-bit version loses little quality while running in far less VRAM.

https://rentry.org/llama-tard-v2

8-bit

| Model | VRAM Used | Minimum Total VRAM | RAM/Swap to Load |
|---|---|---|---|
| LLaMA-7B | 9.2 GB | 10 GB | 24 GB |
| LLaMA-13B | 16.3 GB | 20 GB | 32 GB |
| LLaMA-30B | 36 GB | 40 GB | 64 GB |
| LLaMA-65B | 74 GB | 80 GB | 128 GB |

//

4-bit

| Model | VRAM Used | Minimum Total VRAM | RAM/Swap to Load |
|---|---|---|---|
| LLaMA-7B | 3.5 GB | 6 GB | 16 GB |
| LLaMA-13B | 6.5 GB | 10 GB | 32 GB |
| LLaMA-30B | 15.8 GB | 20 GB | 64 GB |
| LLaMA-65B | 31.2 GB | 40 GB | 128 GB |

//
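As a rough aid for reading the two tables above, the sketch below copies their "Minimum Total VRAM" column into a dictionary and lists which models should fit on a GPU of a given size. The function name `models_that_fit` is hypothetical, and real usage also depends on context length and whatever else is running on the GPU.

```python
# Minimum total VRAM (GB) copied from the 8-bit and 4-bit tables above.
MIN_TOTAL_VRAM_GB = {
    8: {"LLaMA-7B": 10, "LLaMA-13B": 20, "LLaMA-30B": 40, "LLaMA-65B": 80},
    4: {"LLaMA-7B": 6, "LLaMA-13B": 10, "LLaMA-30B": 20, "LLaMA-65B": 40},
}


def models_that_fit(vram_gb: float, bits: int) -> list[str]:
    """Return the models whose minimum total VRAM is <= vram_gb (hypothetical helper)."""
    table = MIN_TOTAL_VRAM_GB[bits]
    return [name for name, need in table.items() if need <= vram_gb]


if __name__ == "__main__":
    # Example: a GPU with 12 GB of VRAM
    print(models_that_fit(12, 4))  # ['LLaMA-7B', 'LLaMA-13B']
    print(models_that_fit(12, 8))  # ['LLaMA-7B']
```

On a 12 GB card this suggests 4-bit is what makes the 13B model usable at all.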

Model file size (GB)

| Model | Original | LLaMA-HFv2 | LLaMA-HFv2 4bit |
|---|---|---|---|
| 7B | 12.6 | 12.5 | 3.5 |
| 13B | 24.2 | 36.3 | 6.5 |
| 30B | 60.6 | 75.7 | 15.7 |
| 65B | 121.6 | 121.6 | 31.2 |
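The sizes above follow roughly from parameter count × bits per weight ÷ 8. A minimal sketch of that arithmetic, assuming the nominal parameter counts from the model names (the real counts differ slightly, and quantized checkpoints may also store extra data such as quantization scales):

```python
def estimate_weight_size_gb(n_params: float, bits: int) -> float:
    """Rough weight size in GB (1 GB = 1e9 bytes): n_params * bits / 8 bytes."""
    return n_params * bits / 8 / 1e9


if __name__ == "__main__":
    for name, n_params in [("7B", 7e9), ("13B", 13e9), ("30B", 30e9), ("65B", 65e9)]:
        fp16 = estimate_weight_size_gb(n_params, 16)
        int4 = estimate_weight_size_gb(n_params, 4)
        print(f"{name}: 16-bit ~{fp16:.1f} GB, 4-bit ~{int4:.1f} GB")
```

The 4-bit estimates (3.5, 6.5, 15.0, 32.5 GB) land close to the table; the 16-bit estimates come out somewhat higher, probably because the table's sizes are in binary gigabytes and the actual parameter counts differ from the rounded names.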

//-----------------------------------------------------------------------------

* Download converted LLaMA models (4-bit versions included)

https://huggingface.co/decapoda-research

- How to download the models

https://aituts.com/llama/

https://rentry.org/llama-tard-v2
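After downloading, a quick sanity check is to compare the size of the model folder with the file-size table above. A minimal sketch, assuming the model sits in a local folder such as `models/llama-7b-4bit` (the path and the helper names are illustrative). It skips `.git`, because a git-lfs clone keeps its own copy of the weights there.

```python
from pathlib import Path


def folder_size_bytes(folder: str) -> int:
    """Sum the sizes of all regular files under `folder`, skipping any .git directory."""
    root = Path(folder)
    return sum(
        p.stat().st_size
        for p in root.rglob("*")
        if p.is_file() and ".git" not in p.relative_to(root).parts
    )


def folder_size_gb(folder: str) -> float:
    # Binary gigabytes (GiB): 1 GiB = 2**30 bytes.
    return folder_size_bytes(folder) / 2**30


if __name__ == "__main__":
    path = "models/llama-7b-4bit"  # illustrative path
    print(f"{path}: {folder_size_gb(path):.1f} GiB")
```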

//-----------------------------------------------------------------------------

< Installation - Windows WSL environment >

- Create a Python virtual environment

conda create -n textgen python=3.10

conda activate textgen

- Install PyTorch

conda install cuda pytorch torchvision torchaudio pytorch-cuda=11.7 -c pytorch -c nvidia/label/cuda-11.7.0

- This installs PyTorch v2.0, which works fine.

- Verify the PyTorch installation

python -c "import torch; print(torch.__version__, torch.cuda.is_available())"
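A slightly longer version of the same check that also reports the Python version, GPU name and total VRAM, so the result can be compared with the VRAM tables above. This is a sketch: it only looks at the first GPU (device 0), the function name is hypothetical, and it still runs (reporting `torch: None`) if PyTorch is not installed.

```python
import sys


def environment_report() -> dict:
    """Collect Python/PyTorch/CUDA details; works even if torch is missing."""
    info = {
        "python": ".".join(map(str, sys.version_info[:3])),
        "torch": None,
        "cuda_available": False,
        "gpu": None,
        "vram_gb": None,
    }
    try:
        import torch
    except ImportError:
        return info  # torch is not installed in this environment
    info["torch"] = torch.__version__
    info["cuda_available"] = torch.cuda.is_available()
    if info["cuda_available"]:
        props = torch.cuda.get_device_properties(0)  # first GPU only
        info["gpu"] = props.name
        info["vram_gb"] = round(props.total_memory / 2**30, 1)
    return info


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key}: {value}")
```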

- Download text-generation-webui

git clone https://github.com/oobabooga/text-generation-webui.git

cd text-generation-webui

- Download GPTQ-for-LLaMa

mkdir repositories

cd repositories

git clone https://github.com/qwopqwop200/GPTQ-for-LLaMa.git

- Install the packages text-generation-webui requires

cd ..

pip install -r requirements.txt

//-------------------------------------

- Install additional packages

conda install ninja

pip install chardet

pip install cchardet

- Error message

error: subprocess-exited-with-error

...

gcc: fatal error: cannot execute ‘cc1plus’: execvp: No such file or directory

- Fix

sudo apt-get install -y g++ build-essential

//-------------------------------------

- Configure and install GPTQ-for-LLaMa

cd repositories/GPTQ-for-LLaMa

export DISTUTILS_USE_SDK=1

pip install -r requirements.txt

python setup_cuda.py install

- Error message

subprocess.CalledProcessError: Command '['which', 'g++']' returned non-zero exit status 1.

- Fix

sudo apt-get install build-essential
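Both build errors above come down to the C++ toolchain not being available (the second one is literally `which g++` failing). A small pre-flight check, as a sketch, that can be run before `python setup_cuda.py install`; the tool list (`gcc`, `g++`, `ninja`) is an assumption based on the packages installed above, and passing it does not guarantee the CUDA build itself will succeed.

```python
import shutil


def missing_build_tools(tools=("gcc", "g++", "ninja")) -> list[str]:
    """Return the build tools that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_build_tools()
    if missing:
        print("Missing:", ", ".join(missing))
        print("Try: sudo apt-get install -y g++ build-essential  (and: conda install ninja)")
    else:
        print("gcc, g++ and ninja found on PATH.")
```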

//-------------------------------------

Run

python server.py --model llama-7b-4bit --gptq-bits 4

- Error message

ValueError: Tokenizer class LLaMATokenizer does not exist or is not currently imported.

- Fix

Edit the file \text-generation-webui\models\llama-7b-4bit\tokenizer_config.json and rename the tokenizer class:

LLaMATokenizer -> LlamaTokenizer
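The same edit can be scripted. A minimal sketch, assuming the config is a flat JSON object in which the class name appears as a plain string value (typically under `tokenizer_class`); the helper name `fix_tokenizer_class` is hypothetical.

```python
import json
from pathlib import Path

OLD_NAME = "LLaMATokenizer"
NEW_NAME = "LlamaTokenizer"


def fix_tokenizer_class(config_path: str) -> bool:
    """Rename LLaMATokenizer -> LlamaTokenizer in a tokenizer_config.json.

    Returns True if the file was changed, False if there was nothing to fix.
    """
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    changed = False
    for key, value in config.items():
        if value == OLD_NAME:
            config[key] = NEW_NAME  # usually the "tokenizer_class" entry
            changed = True
    if changed:
        path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return changed


if __name__ == "__main__":
    print(fix_tokenizer_class("models/llama-7b-4bit/tokenizer_config.json"))
```

Running it a second time returns `False`, so it is safe to repeat.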

//-----------------------------------------------------------------------------

< Troubleshooting >

- Error message

GPTQ_loader.py", line 55,

TypeError: load_quant() missing 1 required positional argument: 'groupsize'

- Fix: text-generation-webui has not yet caught up with recent changes in GPTQ-for-LLaMa, so roll GPTQ-for-LLaMa back to an older git commit.

Go to the GPTQ-for-LLaMa folder (repositories/GPTQ-for-LLaMa)

git checkout 468c47c01b4fe370616747b6d69a2d3f48bab5e4
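Why pinning the commit helps: the error is an ordinary Python signature mismatch between the caller (text-generation-webui) and the callee (GPTQ-for-LLaMa). A toy reproduction using a hypothetical stand-in function, not the real GPTQ-for-LLaMa code:

```python
# Hypothetical stand-in: after the upstream change, the loader gained
# a required `groupsize` argument.
def load_quant(model, checkpoint, wbits, groupsize):
    return model, checkpoint, wbits, groupsize


def old_style_call():
    # The caller was still written for the older three-argument version.
    return load_quant("llama-7b", "llama-7b-4bit.pt", 4)


if __name__ == "__main__":
    try:
        old_style_call()
    except TypeError as err:
        print(err)  # load_quant() missing 1 required positional argument: 'groupsize'
```

Checking out the older commit restores the signature the caller expects, which is why the error goes away.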

//-----------------------------------------------------------------------------

< References >

How to Run a ChatGPT Alternative on Your Local PC

https://www.tomshardware.com/news/running-your-own-chatbot-on-a-single-gpu

- If you are on Windows (without WSL)

Install the Visual Studio 2019 Build Tools

https://learn.microsoft.com/en-us/visualstudio/releases/2019/release-notes

- After downloading, install only the C++ components

//-------------------------------------

https://www.reddit.com/r/LocalLLaMA/comments/11o6o3f/how_to_install_llama_8bit_and_4bit/
